Building a Q&A app with LaunchPad Studio

Motivation

The Quick Start example shows how to build a simple Q&A flow quickly. However, it is not a production-ready solution.

In a typical setting, with a large number of documents and many end users asking different questions about the same data, you would want to:

- Use a vector store to store the embeddings of the data. This allows the embeddings to be reused across different questions.

- Use a vector retriever to retrieve the most relevant documents. This allows you to scale to a large number of documents.

- Only then use a language model to answer the question based on the retrieved documents.

This process is called Retrieval-Augmented Generation (RAG). Refer to this blog post for more details.
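To make the split between indexing once and querying many times concrete, here is a minimal, self-contained Python sketch of the RAG idea. It is not how LaunchPad Studio or Qdrant work internally: the bag-of-words "embedding", the ToyVectorStore class and build_prompt are hypothetical stand-ins for an embeddings model, a vector store and the prompt sent to the LLM. Documents are embedded once when added; each question only embeds the query, ranks the stored vectors by cosine similarity and passes the top k documents on as context.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Tiny stop-word list so that common words do not dominate the toy similarity score.
STOP_WORDS = {"a", "an", "and", "the", "to", "of", "in", "is", "with", "what", "about"}


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words counts, standing in for a real embeddings model."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector store such as Qdrant (not Qdrant's API)."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        # Indexing: each document is embedded once and the vector is stored for reuse.
        self.items.append((doc_id, text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[Tuple[str, str]]:
        # Retrieval: embed the question and rank stored documents by cosine similarity.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(doc_id, text) for doc_id, text, _ in ranked[:k]]


def build_prompt(store: ToyVectorStore, question: str, k: int = 2) -> str:
    # Generation input: only the retrieved documents are passed to the LLM as context.
    docs = store.search(question, k)
    context = "\n===\n".join(f"{doc_id}: {text}" for doc_id, text in docs)
    return f"Context:\n{context}\n===\nQuestion: {question}"
```

With the three movie descriptions used in the ChatLLM template later in this guide added to the store, store.search("What happens to the writer?", k=1) returns the Her entry, because it is the only description that shares a non-stop word ("writer") with the question.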

Sample flows

In this example, we will:

- Create a Vector Store Management flow that loads data from a documentation website and stores its content in a Qdrant vector store (Qdrant is a vector database provider, much as Oracle and MySQL are relational database providers).

- Create a Question Answering flow that uses an LLM to answer questions about the documentation.

Conceptually, the flow diagrams look like these:

Vector Store Management flow

Question Answering flow

Let's go step by step:

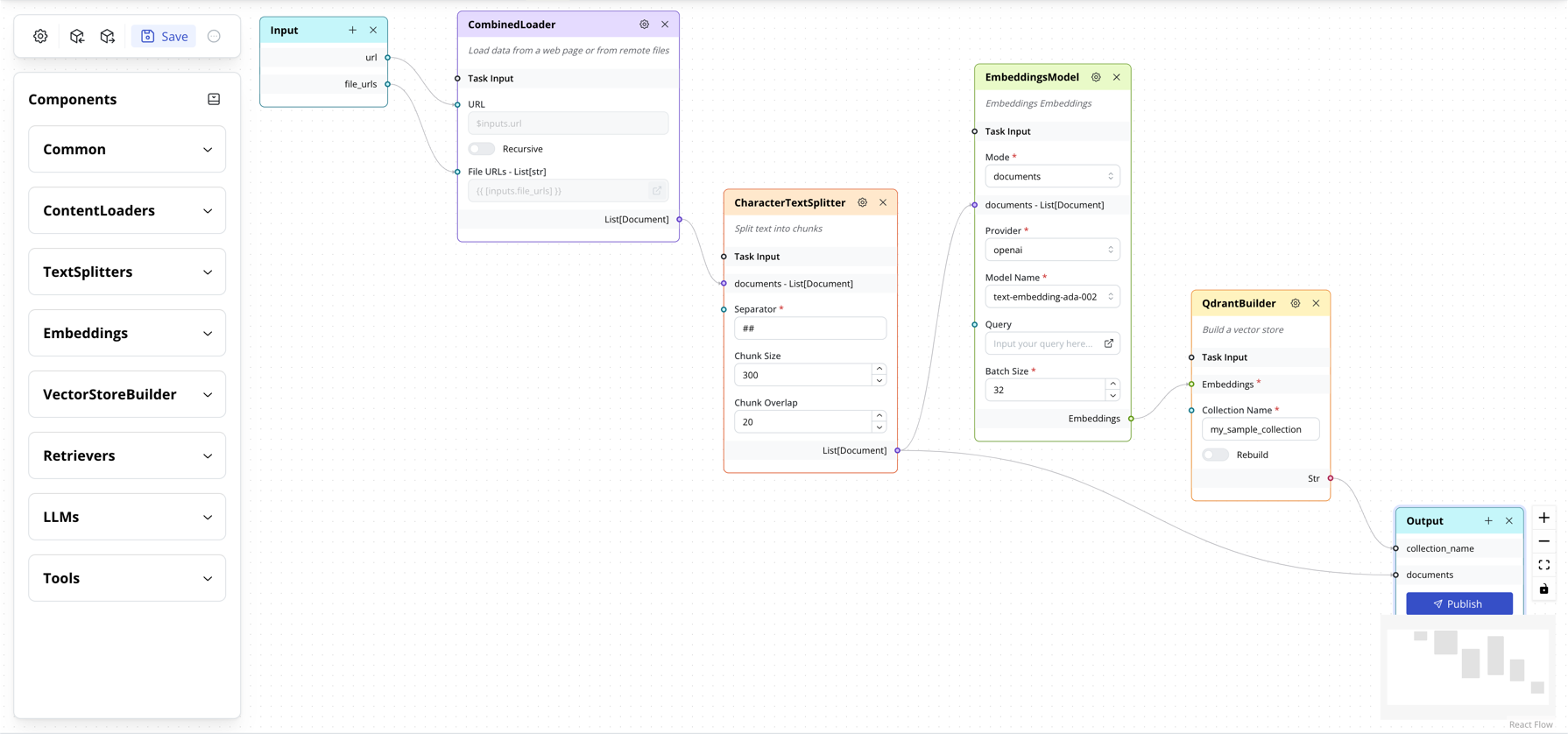

A. Vector Store Management flow

I. Create the Flow

- Start at LaunchPad Studio.

- Click on the Sample Vector Store Management Flow template.

  Note: If you are not able to open the template, you can download this JSON file and import it into LaunchPad Studio to get the same flow.

- The Studio will load the full flow as shown below.

As you can see, the flow runs the following tasks in sequence:

- CombinedLoader: A content loader that combines the WebLoader and RemoteFileLoader tasks. It can load data from a website and from remote files.
- CharacterTextSplitter: A text splitter that uses a single separator to split documents into smaller chunks of text. This is required to keep each chunk within the maximum token length of the embeddings model.
- EmbeddingsModel: Embeds the text chunks into vectors, using an embeddings model such as OpenAI Ada v2.
- QdrantBuilder: A vector store builder that stores the vectors in a Qdrant vector store for later retrieval.

II. Customize the Flow

- CharacterTextSplitter:
  - Update the separator to a value of your choice.
  - Note that loading from a webpage has the following behavior: h1, h2 and h3 tags are converted to #, ## and ### respectively, so one of these can be used as the separator. In a future release, we will add other ways to split website content.
  - For documents, you have to choose your own separator based on the content. For example, if a document has multiple chapters titled Chapter 1, Chapter 2, ..., you can use \nChapter as the separator.

  Note: this is a wrapper on top of LangChain's CharacterTextSplitter.

- QdrantBuilder:
  - Update the collection_name to a value of your choice. You can use different collection names to logically separate your data. The name is unique to your account; it is not shared with other users.
  - Turn on the rebuild flag if you want the collection to be recreated every time the flow is run. This is useful for initial testing, so that you have a clean set of data after every run.
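To see how the separator choice shapes the chunks, the sketch below is a simplified, hypothetical re-implementation of single-separator splitting in Python; it is not LangChain's CharacterTextSplitter code, which also supports options such as chunk overlap. It splits on the separator, then greedily merges neighboring pieces while the merged chunk stays within chunk_size characters. The example text assumes a web page whose h2 headings were converted to ##.

```python
from typing import List


def split_text(text: str, separator: str, chunk_size: int) -> List[str]:
    """Simplified single-separator splitter (hypothetical; not LangChain's code).

    1. Split the text on `separator` and drop empty pieces.
    2. Greedily merge neighboring pieces (re-joined with the separator) while the
       merged chunk stays within `chunk_size` characters.
    A single piece longer than `chunk_size` is kept whole rather than cut.
    """
    pieces = [p.strip() for p in text.split(separator) if p.strip()]
    chunks: List[str] = []
    current = ""
    for piece in pieces:
        candidate = f"{current}{separator}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks


# Hypothetical page content after h1/h2 tags were converted to # and ##.
page = "# Title\nIntro.\n## Setup\nInstall it.\n## Usage\nRun it."
print(split_text(page, "\n## ", chunk_size=20))
print(split_text(page, "\n## ", chunk_size=40))
```

With chunk_size=20 each section becomes its own chunk; with chunk_size=40 the first two sections fit together and are merged into one chunk, while the third stays separate.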

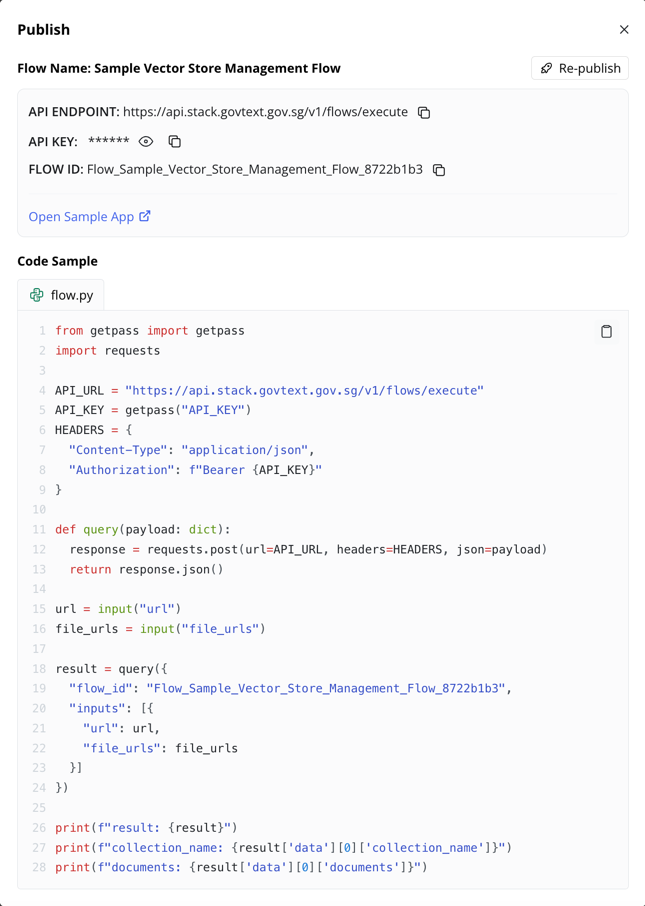

III. Publish the Flow

The flow is complete and is now ready to be published.

- Click the Publish button in the Output block. A new Publish dialog appears with a loading icon.

- A confirmation dialog is shown. If you wish to test this flow using our Sample App, you can set the Sample App's title and description here.

- Wait a few seconds for the full instructions to appear.

- Take note of:
  - API Endpoint, API Key, Flow ID: you can use these in your own app. An example in Python is shown.
  - Sample App: Click Open Sample App to open the sample demo app for this flow type (i.e., VectorStoreManagement). This is only available for flow types for which we have built demo apps.

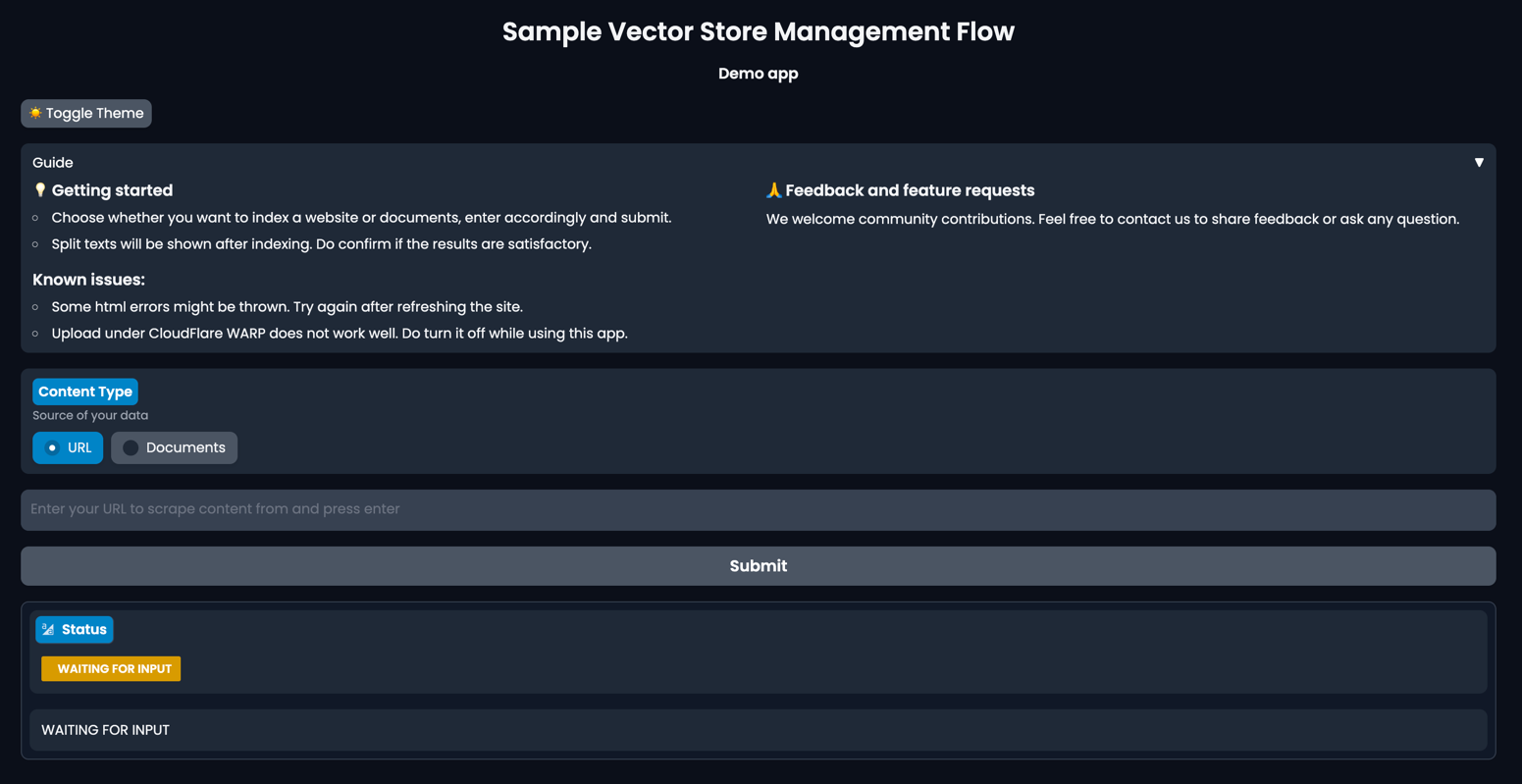

IV. Using the Flow to add data to the Vector Store

There are two ways to do this:

- Use the API access and invoke the flow with the url and file_urls parameters set accordingly (if you don't use one of them, set url to an empty string or file_urls to an empty list).

- Use the sample app that we have built for demo purposes. You will see the following screen; follow the provided instructions.

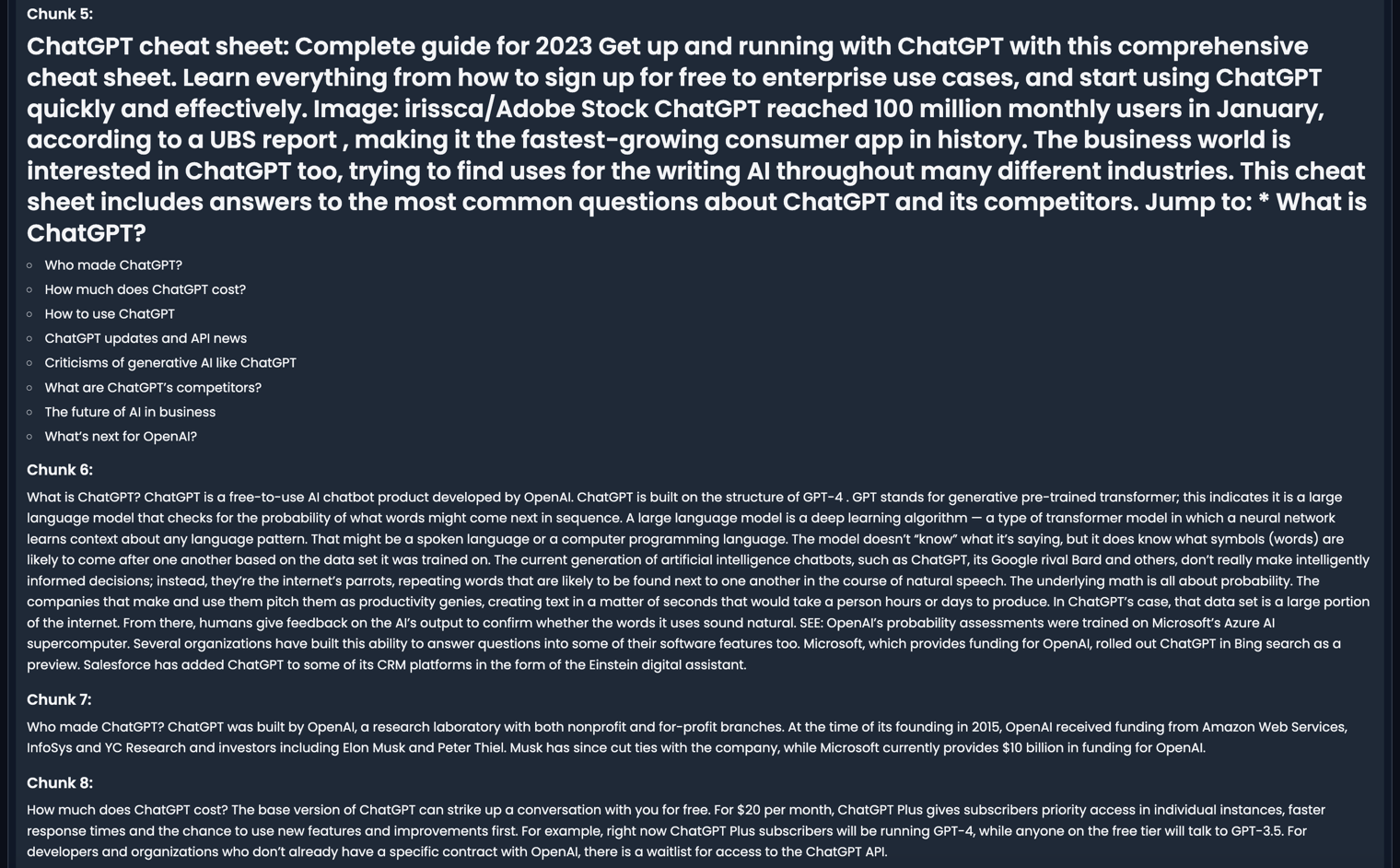
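For the API option, the Publish dialog shows the real API Endpoint, API Key, Flow ID and a Python example; treat that as the source of truth. The sketch below only illustrates the input rule described above (url as an empty string or file_urls as an empty list when unused), using Python's standard urllib. API_ENDPOINT, API_KEY, FLOW_ID, the JSON body layout and the Authorization header are all hypothetical placeholders, not LaunchPad's documented API.

```python
import json
import urllib.request
from typing import Dict, List, Optional

# Hypothetical placeholders: copy the real values from the Publish dialog.
API_ENDPOINT = "https://example.invalid/your-flow-endpoint"
API_KEY = "YOUR_API_KEY"
FLOW_ID = "YOUR_VECTOR_STORE_FLOW_ID"


def build_inputs(url: str = "", file_urls: Optional[List[str]] = None) -> Dict[str, object]:
    """Inputs for the Vector Store Management flow.

    An unused source is sent as "" (url) or [] (file_urls), as the docs require.
    """
    return {"url": url or "", "file_urls": list(file_urls or [])}


def build_request(inputs: Dict[str, object]) -> urllib.request.Request:
    # ASSUMPTION: the body layout and the Authorization header are placeholders,
    # not LaunchPad's documented API; follow the Python example in the Publish dialog.
    body = json.dumps({"flow_id": FLOW_ID, "inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        API_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


def invoke_flow(inputs: Dict[str, object]) -> dict:
    # Sends the request and decodes a JSON response (performs a network call).
    with urllib.request.urlopen(build_request(inputs)) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, build_inputs(url="https://www.techrepublic.com/article/chatgpt-cheat-sheet/") indexes a single web page and sends an empty file_urls list.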

For example, when the sample app indexes the website https://www.techrepublic.com/article/chatgpt-cheat-sheet/, it shows the resulting split texts.

Now that we've covered the Vector Store Management flow, let's move on to the Question Answering flow.

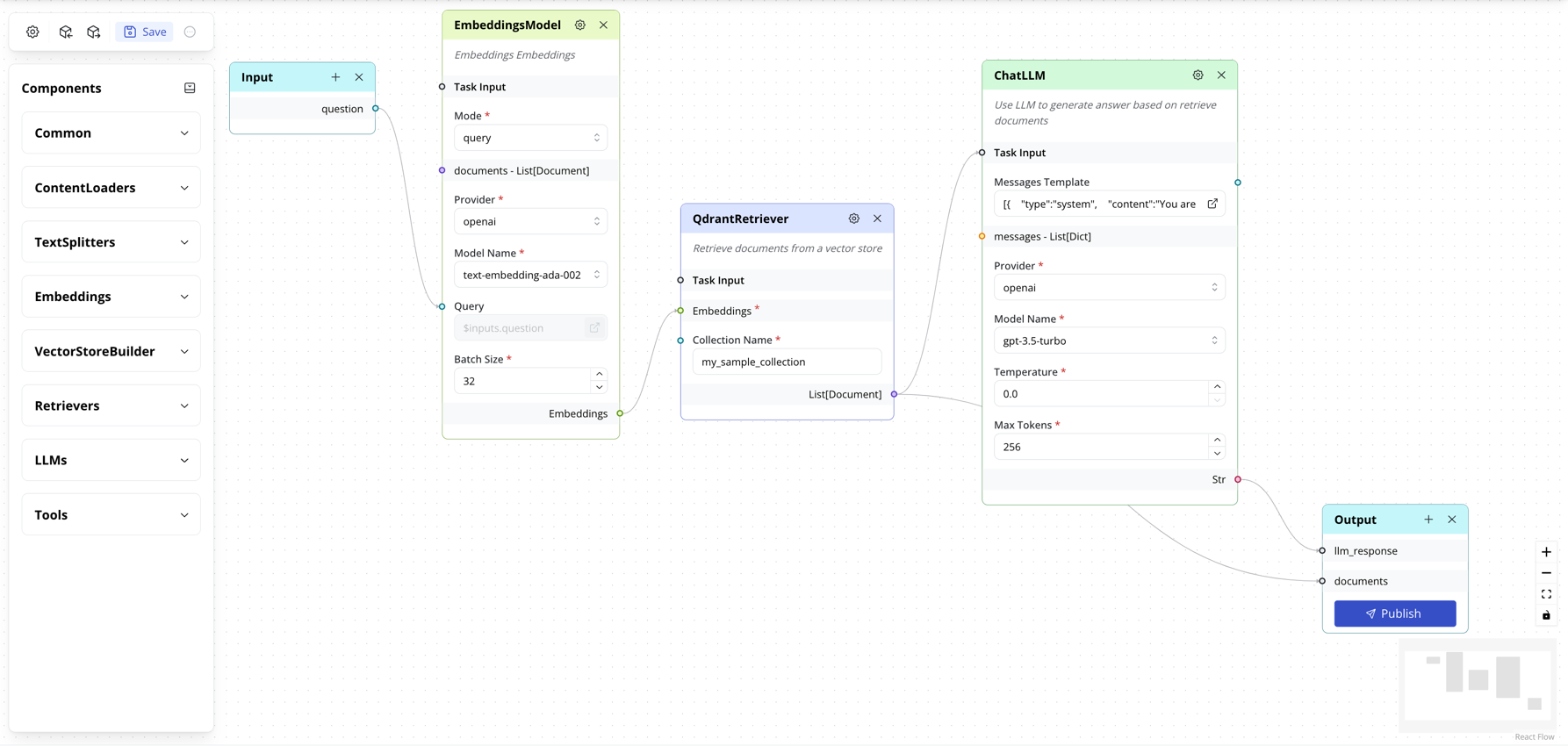

B. Question Answering flow

I. Create the Flow

- Start at LaunchPad Studio.

- Click on the Sample Question Answering Flow template.

  Note: If you are not able to open the template, you can download this JSON file and import it into LaunchPad Studio to get the same flow.

- The Studio will load the full flow as shown below.

As you can see, the flow runs the following tasks in sequence:

- EmbeddingsModel: Converts the user question into a vector representation for similarity search by the QdrantRetriever task.
- QdrantRetriever: A vector store retriever that uses the question vector to search for similar vectors in the Qdrant vector store built by the Vector Store Management flow above. The most related documents are returned.
- ChatLLM: Uses the related documents as context for the LLM, which then generates the answer to the user question.

II. Customize the Flow

- QdrantRetriever:
  - Ensure the collection_name matches the name set in the QdrantBuilder above.

- ChatLLM:
  - Update the Message Template. The following template is provided for reference; notice that it includes an example:

[{ "type":"system", "content":"You are a Question Answering Bot. You can provide questions based on given context.\nIf you don't know the answer, just say that you don't know. Don't try to make up an answer.\nAlways include sources the answer in the format: 'Source: source1' or 'Sources: source1 source2'.\n\nContext:\n===\nTerminator: A cyborg assassin is sent back in time to eliminate the mother of a future resistance leader, highlighting the threat of AI dominance.\n===\nHer: A lonely writer falls in love with an intelligent operating system, raising existential questions about love and human connection.\n===\nEx-Machina: A programmer tests the capabilities of an alluring AI, leading to an unsettling exploration of consciousness and manipulation.\n===\nQuestion: What happens to the writer?\nThe writer develops a deep emotional connection with an intelligent operating system, but experiences heartbreak and existential questioning\nSource: Her\n\n" },{ "type":"user", "content":"Context:\n{%- for doc in QdrantRetriever.output %}\n{{ doc.id }}: {{ doc.content }}\n===\n{%- endfor %}\n===\nQuestion: {{ inputs.question }}" }]
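To check what the LLM actually receives, the user message template above can be reproduced in plain Python. This sketch assumes standard Jinja2 semantics with default settings: the {%- marker strips the whitespace (here, the newline) immediately before the tag, so each loop iteration contributes "\n<id>: <content>\n===", and a final "\n===" follows the loop. Representing QdrantRetriever.output as a list of dicts with id and content keys is an assumption for illustration.

```python
from typing import Dict, List


def render_user_message(docs: List[Dict[str, str]], question: str) -> str:
    """Plain-Python equivalent of the ChatLLM user template (assumes default Jinja2 settings).

    Each doc is assumed to be a dict with "id" and "content" keys (hypothetical shape
    for QdrantRetriever.output).
    """
    parts = ["Context:"]  # "{%-" removes the newline between "Context:" and the loop
    for doc in docs:
        # Loop body after whitespace control: "\n{{ doc.id }}: {{ doc.content }}\n==="
        parts.append(f"\n{doc['id']}: {doc['content']}\n===")
    # Text after "{%- endfor %}": "\n===\nQuestion: {{ inputs.question }}"
    parts.append(f"\n===\nQuestion: {question}")
    return "".join(parts)


docs = [
    {"id": "Her", "content": "A lonely writer falls in love with an intelligent operating system."},
]
print(render_user_message(docs, "What happens to the writer?"))
```

The rendered context ends with two === lines in a row: one from the last loop iteration and one written after the loop.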

III. Publish the Flow

Same as for the Vector Store Management flow above.

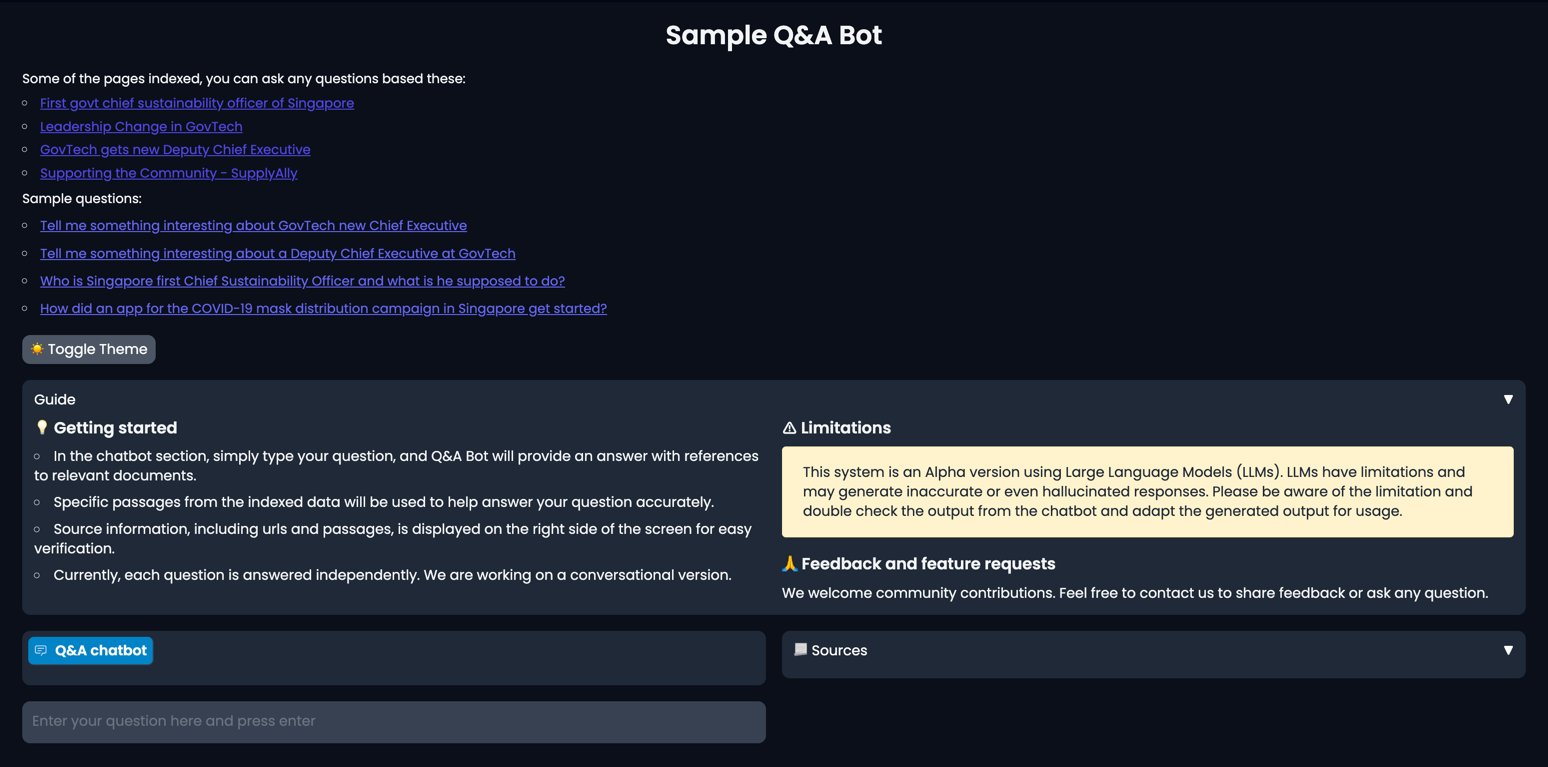

IV. Using the Flow to ask questions about the indexed data

There are two ways to do this:

- Use the API access and invoke the flow with the question parameter set accordingly.

- Use the sample app that we have built for demo purposes. You will see the following screen; follow the provided instructions.

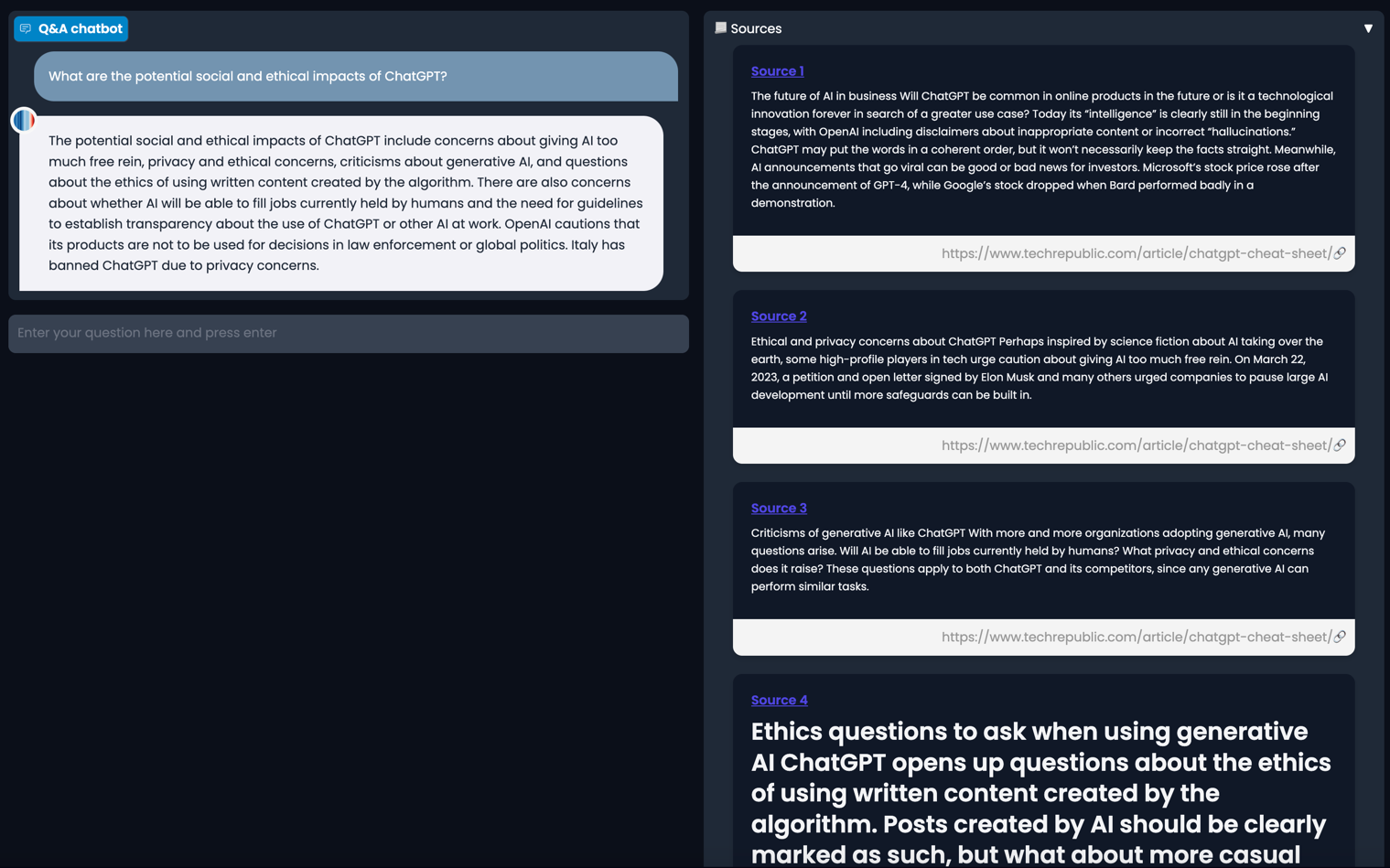
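For the API option, the Question Answering flow takes a question input (the user template reads it as inputs.question). As with the Vector Store Management flow, copy the real endpoint, key, flow ID and request format from the Publish dialog. API_ENDPOINT, API_KEY, FLOW_ID, the body layout and the Authorization header below are hypothetical placeholders; the request is built with Python's standard urllib.

```python
import json
import urllib.request

# Hypothetical placeholders: copy the real values from the Q&A flow's Publish dialog.
API_ENDPOINT = "https://example.invalid/your-flow-endpoint"
API_KEY = "YOUR_API_KEY"
FLOW_ID = "YOUR_QUESTION_ANSWERING_FLOW_ID"


def build_question_request(question: str) -> urllib.request.Request:
    # The user template reads inputs.question, so the text is sent under "question".
    # ASSUMPTION: the outer body layout and the auth header are placeholders.
    body = json.dumps({"flow_id": FLOW_ID, "inputs": {"question": question}}).encode("utf-8")
    return urllib.request.Request(
        API_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )


def ask(question: str) -> dict:
    # Sends the question and decodes a JSON response (performs a network call).
    with urllib.request.urlopen(build_question_request(question)) as response:
        return json.loads(response.read().decode("utf-8"))
```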

For example, if you have indexed https://www.techrepublic.com/article/chatgpt-cheat-sheet/ as suggested in the Vector Store Management flow, asking the question "What are the potential social and ethical impacts of ChatGPT?" in the sample app shows the resulting answer as well as the references to the chunks used.
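Because the system prompt asks the model to cite sources as 'Source: source1' or 'Sources: source1 source2', an app calling this flow can pull the cited ids out of the answer text. This is a minimal sketch that assumes the model follows the format, puts each Source(s) line on its own line, and uses ids without spaces. An LLM may not always comply, so treat an empty result as "no sources found" rather than as an error.

```python
import re
from typing import List

# Matches a line such as "Source: Her" or "Sources: Terminator Ex-Machina".
SOURCE_LINE = re.compile(r"^Sources?:\s*(.+)$", re.MULTILINE)


def extract_sources(answer: str) -> List[str]:
    """Return the source ids cited on 'Source:'/'Sources:' lines of an answer.

    Assumes ids contain no spaces, since multiple sources are space-separated.
    """
    sources: List[str] = []
    for match in SOURCE_LINE.finditer(answer):
        sources.extend(match.group(1).split())
    return sources
```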

VII. Next steps

You've seen how an end-to-end Q&A example is built. You can now try building your own flows in LaunchPad Studio or check out the More Examples page.